Challenges with Doing Effective Cyber Resiliency Testing

Ryan Torvik - September 16, 2024 - 6 minute read

Effective Cyber Resiliency Testing

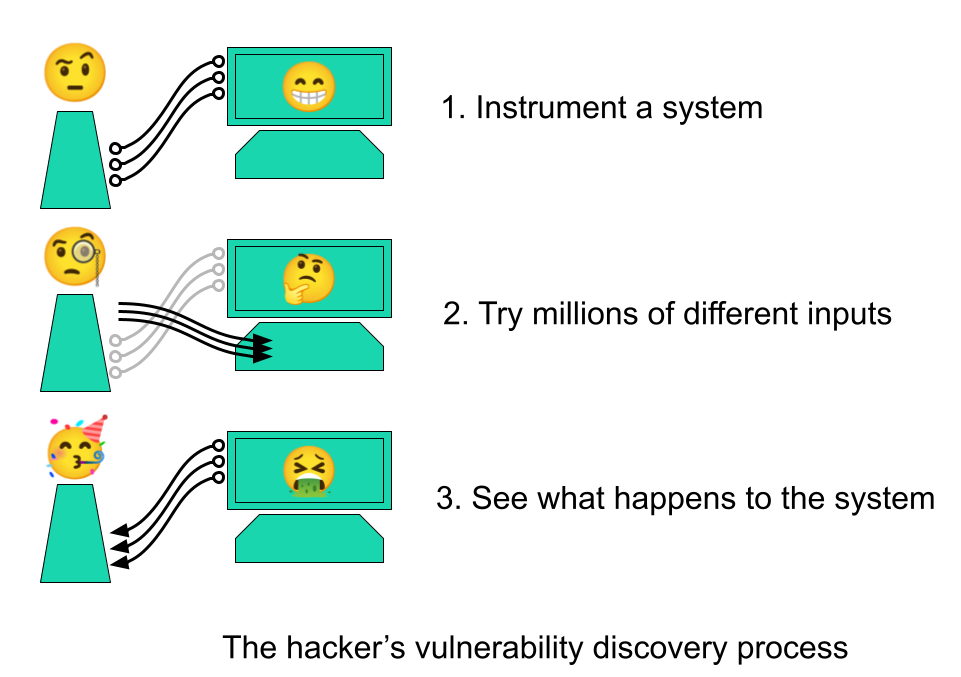

The most effective cyber resiliency testing is done the same way hackers search for zero-day vulnerabilities. First, you instrument a target system. Then, you create millions of different input permutations. Next, you have the target system ingest each input and observe how it reacts. Finally, you use static analysis of the target firmware to identify more interesting data combinations, and repeat the process.
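
The loop above can be sketched as a small mutation-based fuzzer. This is a hedged illustration, not Tulip Tree's tooling: `parse_packet` is a hypothetical target, its `coverage` set stands in for instrumentation, and coverage feedback is swapped in for the static-analysis step as the way of deciding which inputs are "interesting" enough to keep mutating.

```python
import random
from typing import List, Optional, Set, Tuple


def parse_packet(data: bytes, coverage: Set[str]) -> None:
    """Hypothetical firmware routine standing in for the instrumented target.

    Each branch records an ID in `coverage`; this is the "instrumentation"
    that lets the fuzzer observe how the target reacted to an input.
    """
    coverage.add("entry")
    if len(data) < 4:
        coverage.add("too_short")
        return
    if data[0] == 0x7E:  # hypothetical sync byte
        coverage.add("sync_ok")
        length = data[1]
        if length > len(data) - 2:
            coverage.add("length_overflow")
            # On a real device this could be an out-of-bounds read; here it is a crash.
            raise IndexError("declared length exceeds buffer")
        coverage.add("payload_ok")


def mutate(data: bytes, rng: random.Random) -> bytes:
    """Produce one input permutation: flip a bit, overwrite a byte, or insert a byte."""
    buf = bytearray(data)
    choice = rng.randrange(3)
    if choice == 0 and buf:
        i = rng.randrange(len(buf))
        buf[i] ^= 1 << rng.randrange(8)
    elif choice == 1 and buf:
        buf[rng.randrange(len(buf))] = rng.randrange(256)
    else:
        buf.insert(rng.randrange(len(buf) + 1), rng.randrange(256))
    return bytes(buf)


def run(data: bytes) -> Tuple[Set[str], Optional[Exception]]:
    """Feed one input to the target and report the branches it hit and any crash."""
    coverage: Set[str] = set()
    try:
        parse_packet(data, coverage)
    except Exception as exc:
        return coverage, exc
    return coverage, None


def fuzz(seeds: List[bytes], iterations: int = 20_000, seed: int = 0):
    rng = random.Random(seed)
    corpus = list(seeds)
    seen: Set[str] = set()
    for s in corpus:
        seen |= run(s)[0]
    crashes: List[Tuple[bytes, Exception]] = []
    for _ in range(iterations):
        candidate = mutate(rng.choice(corpus), rng)
        coverage, crash = run(candidate)
        if crash is not None:
            crashes.append((candidate, crash))
        if not coverage <= seen:
            # New behavior: keep this input so later mutations build on it.
            seen |= coverage
            corpus.append(candidate)
    return corpus, seen, crashes


corpus, seen, crashes = fuzz([b"\x7e\x02ab"])
print(f"corpus={len(corpus)} branches={sorted(seen)} crashes={len(crashes)}")
```

Keeping only inputs that reach new branches is what lets the search move past the seed toward the overflow condition instead of sampling blindly.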

An ideal solution is to model an embedded system entirely in software. This makes it easy to introspect, start, stop, and refresh the system under test quickly. A modeling framework that accurately represents how data is manipulated as it moves through the system is best, because it lets you identify when external data is being used in a vulnerable way. The more hardware configurations your framework can model, the more you can reuse your instrumentation to find similar vulnerabilities across diverse systems. Finally, the more inputs you can test in a given amount of time, the more vulnerabilities you can find. Scaling your testing efforts becomes increasingly important as vulnerable conditions become more subtle and harder to find.
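
To make "introspect, start, stop, and refresh" concrete, here is a minimal sketch assuming a hypothetical `DeviceModel` whose entire state lives in ordinary Python objects. Because nothing lives in physical silicon, a snapshot taken after boot can be restored before every test, and any register or RAM byte can be read directly. The register names, RAM size, and RX buffer offset are invented for illustration.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class DeviceModel:
    """Hypothetical, heavily simplified software model of an embedded device."""
    registers: Dict[str, int] = field(default_factory=lambda: {"pc": 0, "sp": 0x2000})
    ram: bytearray = field(default_factory=lambda: bytearray(64))
    booted: bool = False

    def boot(self) -> None:
        # Stand-in for an expensive boot sequence that we only want to run once.
        self.ram[0:4] = b"BOOT"
        self.registers["pc"] = 0x100
        self.booted = True

    def feed(self, data: bytes) -> None:
        # Copy external input into a hypothetical 16-byte RX buffer at offset 16.
        rx = data[:16]
        self.ram[16:16 + len(rx)] = rx
        self.registers["pc"] += 4

    def snapshot(self) -> "DeviceModel":
        # Because all state lives in Python objects, a deep copy captures it all.
        return copy.deepcopy(self)

    def restore(self, snap: "DeviceModel") -> None:
        # Roll every piece of state back without re-running boot().
        self.registers = dict(snap.registers)
        self.ram = bytearray(snap.ram)
        self.booted = snap.booted


device = DeviceModel()
device.boot()
clean = device.snapshot()
for test_input in [b"hello", b"\xff" * 32]:
    device.feed(test_input)
    # Introspection: any register or byte of RAM can be read directly.
    print(test_input[:4], bytes(device.ram[16:20]), hex(device.registers["pc"]))
    device.restore(clean)
```

Restoring from a snapshot rather than rebooting is what keeps per-input cost low when a campaign runs millions of inputs.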

Business Reasons for Not Doing Cyber Resiliency Testing

Medical device manufacturers are not conducting thorough cyber resiliency testing for several reasons. Regulations, while increasingly emphasizing cybersecurity, do not mandate specific testing protocols, which leads manufacturers to prioritize meeting functionality requirements over cybersecurity. Resource constraints, including limited budget, expertise, and time, hinder the implementation of robust cybersecurity testing. Because there have been few cyberattacks targeting embedded medical devices, manufacturers may lack awareness of evolving cybersecurity risks. Legacy systems and interoperability concerns, particularly within complex healthcare ecosystems, can make cybersecurity measures harder to implement. Additionally, market pressures, such as the competitive drive for rapid time-to-market, lead manufacturers to prioritize product development over comprehensive cybersecurity testing.

Obstacles to Requiring Cyber Resiliency Testing

There are several obstacles to overcome if a federal agency were to require effective cyber resiliency testing of embedded medical devices. No existing regulations define the parameters of thorough cyber resiliency testing. There are also no mature solutions that overcome the technical difficulties of performing thorough cyber resiliency testing of embedded medical devices. Even if those technical difficulties were overcome, there is no actuarial data on how much thorough cyber resiliency testing would cost. Finally, there is no way to verify whether thorough testing has been conducted. Tulip Tree Technology proposes using our proprietary integrated firmware development environment to determine the cost of thorough cyber resiliency testing and to help define what that kind of testing should look like.

Technical Limitations of Doing Thorough Cyber Resiliency Testing

While the administrative reasons above dissuade organizations from performing cyber resiliency testing, there are a number of technical limitations as well. These limitations are present in the three major approaches used during firmware development. The majority of firmware development is done directly on hardware. Some companies use low-level simulators that either model how transistors behave or are clock-cycle accurate with the hardware. Others use emulators such as QEMU to rehost and execute firmware, trading fidelity of hardware interaction for speed of execution. Each of these approaches is insufficient for cyber resiliency testing.

| Technical Limitation | On Hardware | In Low-Level Simulator | In QEMU |
|---|---|---|---|
| Lack of support for the diversity of hardware configurations | Have to purchase new hardware for each product; tooling has to be rewritten for new architectures | Tooling has to be rewritten for new architectures | Adding new models requires a deep understanding of QEMU internals |
| Lack of adequate data transformation analysis | No data transformation analysis | No data transformation analysis | No data transformation analysis built in; add-ons receive low-fidelity information |
| Inability to scale | Have to purchase more hardware to run more tests in parallel | Not designed for distributed testing | Requires significant rework to support parallelized testing |
| Inadequate user interfaces | No user interface | Requires deep understanding of how the hardware works | Command-line interface only out of the box; some forks offer GUIs |

Lack of support for the diversity of hardware configurations

Embedded medical devices span the full spectrum of processor architectures and peripheral devices. Whether you develop on hardware, use a simulator, or run inside an emulator like QEMU, tools used to debug software on one system cannot be used on another without significant modifications. This lack of standardized tooling makes it nearly impossible to perform thorough cyber resiliency testing across (or even within) subsets of embedded medical devices without incurring significant additional cost.
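
One way to keep tooling from being rewritten for every configuration is to write instrumentation once against a generic bus and peripheral interface, and to describe each board as data. The sketch below is a hypothetical illustration: `Peripheral`, `Uart`, `Bus`, `echo_firmware`, and both board memory maps are invented for this example, and the register offsets do not correspond to any real part.

```python
from typing import Dict, List, Protocol, Tuple


class Peripheral(Protocol):
    def read(self, offset: int) -> int: ...
    def write(self, offset: int, value: int) -> None: ...


class Uart:
    """Hypothetical UART model; the same class is reused on every board."""

    def __init__(self) -> None:
        self.tx: List[int] = []  # bytes the firmware transmitted
        self.rx: List[int] = []  # bytes queued for the firmware to receive

    def read(self, offset: int) -> int:
        if offset == 0x0:  # data register: pop the next received byte
            return self.rx.pop(0) if self.rx else 0
        if offset == 0x4:  # status register: bit 0 set while RX data is waiting
            return 1 if self.rx else 0
        return 0

    def write(self, offset: int, value: int) -> None:
        if offset == 0x0:  # data register: transmit one byte
            self.tx.append(value & 0xFF)


class Bus:
    """Routes accesses by address; its trace is instrumentation written once for every board."""

    def __init__(self, memory_map: Dict[int, Tuple[int, Peripheral]]) -> None:
        self.memory_map = memory_map  # base address -> (region size, peripheral model)
        self.trace: List[Tuple[str, int, int]] = []

    def _find(self, addr: int) -> Tuple[Peripheral, int]:
        for base, (size, dev) in self.memory_map.items():
            if base <= addr < base + size:
                return dev, addr - base
        raise ValueError(f"unmapped address {addr:#x}")

    def read(self, addr: int) -> int:
        dev, offset = self._find(addr)
        value = dev.read(offset)
        self.trace.append(("R", addr, value))
        return value

    def write(self, addr: int, value: int) -> None:
        dev, offset = self._find(addr)
        dev.write(offset, value)
        self.trace.append(("W", addr, value))


def echo_firmware(bus: Bus, uart_base: int) -> None:
    """Toy 'firmware' that echoes received bytes; only the base address differs per board."""
    while bus.read(uart_base + 0x4) & 1:
        bus.write(uart_base + 0x0, bus.read(uart_base + 0x0))


# Two hypothetical boards that place the same UART model at different addresses.
uart_a, uart_b = Uart(), Uart()
boards = {
    "A": (0x4000_C000, {0x4000_C000: (0x1000, uart_a)}),
    "B": (0x1001_3000, {0x1001_3000: (0x100, uart_b)}),
}
uart_a.rx.extend(b"hi")
uart_b.rx.extend(b"hi")
traces = {}
for name, (uart_base, memory_map) in boards.items():
    bus = Bus(memory_map)
    echo_firmware(bus, uart_base)
    traces[name] = bus.trace
    print(name, len(bus.trace), "bus accesses traced")
```

Supporting a new board here means adding a memory-map entry, not rewriting the tracing code.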

Developing models for new hardware configurations in an emulator like QEMU requires a thorough understanding of the emulator's internals. Configurations are inflexible and difficult to craft correctly, and the modeling framework is not optimized for quickly creating new models.

Lack of adequate data transformation analysis

When developing on hardware, it is impossible to do any kind of data transformation analysis during a test. At most, a debugging interface lets you read values from certain areas of the system at the end of a test scenario, so any kind of data flow analysis is unrealistic.

When using a low-level, transistor-accurate simulation framework, the system executes too slowly to be useful; it can take upwards of 18 hours to boot Linux. These systems were also not designed to track data as it moves through a system, and adding any kind of data tracing to them would make them even less usable for cyber resiliency testing.

Many existing hardware modeling systems are based on the open-source QEMU emulator, which has focused on making execution as fast as possible. A plethora of forks and add-ons have attempted to add hooks and plugins to QEMU so that it can track data accurately. All of them have struggled to perform accurate data transformation analysis because of the shortcuts QEMU takes to achieve fast execution.
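
Data transformation analysis of this kind is commonly implemented as dynamic taint tracking: every value carries a shadow record of which input bytes it was derived from, each operation propagates that record, and sensitive uses ("sinks") are checked. The toy machine below is a hedged illustration of that general technique, not of how Emerson or any QEMU add-on works; its operations (`load`, `movi`, `add`, `memcpy`) and 256-byte address space are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

MEM_SIZE = 256


@dataclass
class TaintMachine:
    """Hypothetical toy machine that keeps a shadow taint set beside every byte and register.

    A taint set records which input-packet offsets a value was derived from.
    """
    mem: bytearray = field(default_factory=lambda: bytearray(MEM_SIZE))
    mem_taint: List[Set[int]] = field(default_factory=lambda: [set() for _ in range(MEM_SIZE)])
    regs: Dict[str, int] = field(default_factory=dict)
    reg_taint: Dict[str, Set[int]] = field(default_factory=dict)
    alerts: List[str] = field(default_factory=list)

    def receive(self, addr: int, packet: bytes) -> None:
        # External data enters here; byte i of the packet is labeled {i}.
        for i, b in enumerate(packet):
            self.mem[(addr + i) % MEM_SIZE] = b
            self.mem_taint[(addr + i) % MEM_SIZE] = {i}

    def load(self, reg: str, addr: int) -> None:
        self.regs[reg] = self.mem[addr]
        self.reg_taint[reg] = set(self.mem_taint[addr])

    def movi(self, reg: str, imm: int) -> None:
        # Constants from the firmware itself carry no taint.
        self.regs[reg] = imm & 0xFF
        self.reg_taint[reg] = set()

    def add(self, dst: str, a: str, b: str) -> None:
        # The result depends on both operands, so it inherits both taint sets.
        self.regs[dst] = (self.regs[a] + self.regs[b]) & 0xFF
        self.reg_taint[dst] = self.reg_taint[a] | self.reg_taint[b]

    def memcpy(self, dst: int, src: int, length_reg: str) -> None:
        # Sink check: an input-controlled copy length is a classic overflow pattern.
        length = self.regs[length_reg]
        if self.reg_taint[length_reg]:
            self.alerts.append(
                f"memcpy length derived from input bytes {sorted(self.reg_taint[length_reg])}"
            )
        for i in range(length):
            s, d = (src + i) % MEM_SIZE, (dst + i) % MEM_SIZE
            self.mem[d] = self.mem[s]
            self.mem_taint[d] = set(self.mem_taint[s])


m = TaintMachine()
m.receive(0x10, b"\x7e\x05hello")  # sync byte, length byte, payload
m.load("r0", 0x11)                 # firmware reads the length field
m.movi("r1", 2)
m.add("r2", "r0", "r1")            # length + header size
m.memcpy(0x80, 0x12, "r2")
print(m.alerts)  # ['memcpy length derived from input bytes [1]']
```

The alert fires even though this particular input does not overflow anything, which is the point: the analysis flags that external data controls the copy length, independent of whether a given test happens to crash.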

Inability to scale

In all existing environments, the ability to scale testing efforts was not a priority. With hardware, extra devices need to be purchased, set up, and maintained. The complex simulators are often Windows desktop applications that prioritize the individual user experience over amenability to broad-scale testing. QEMU is also meant to be run as a user application compiled specifically for your desktop environment. The difficulty of scaling makes each of these solutions suboptimal for cyber resiliency testing.
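
When every test runs against a software model, scaling can be as simple as partitioning the inputs across hosts and running many instances per host. A minimal sketch, assuming a hypothetical `run_one_test` that in practice would start an isolated model instance: a thread pool is used only to keep the example portable, since real workers would be separate emulator processes or machines, and CPU-bound Python targets would instead need `ProcessPoolExecutor`. The `shard` helper is likewise hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Optional, Sequence, Tuple


def run_one_test(data: bytes) -> Optional[str]:
    """Hypothetical stand-in for starting a fresh model instance and feeding it one input.

    Returns a failure description, or None if the target behaved correctly.
    """
    if len(data) >= 2 and data[0] == 0x7E and data[1] > len(data) - 2:
        return "declared length exceeds buffer"
    return None


def shard(inputs: Sequence[bytes], index: int, count: int) -> List[bytes]:
    """Deterministically give host `index` of `count` every count-th input, with no coordinator."""
    return list(inputs[index::count])


def run_campaign(inputs: Sequence[bytes], workers: int = 8) -> List[Tuple[bytes, str]]:
    failures: List[Tuple[bytes, str]] = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order, so zip pairs each result with its input.
        for data, result in zip(inputs, pool.map(run_one_test, inputs)):
            if result is not None:
                failures.append((data, result))
    return failures


inputs = [bytes([0x7E, n]) + b"ab" for n in range(8)]
# Simulate three hosts, each running its own shard of the campaign.
all_failures = [f for host in range(3) for f in run_campaign(shard(inputs, host, 3))]
print(len(all_failures), "failing inputs")
```

Because sharding is deterministic, adding hosts only changes `count`; no shared queue or central scheduler is needed for this simple case.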

Inadequate user interfaces

Developing directly on hardware requires a significant understanding of how the underlying hardware and electrical components work. Low-level simulators have much better user interfaces, but they are still extremely detailed and difficult to use without expertise. QEMU ships with only a command-line interface and requires significant rework and expertise to build a passable user interface.

Conclusion

Tulip Tree Technology is developing Emerson, a secure firmware development framework that will help firmware developers write more secure code, faster. Keep following for more details.